Opportunities to govern: How to increase the supply of moderate and competent candidates

The state of American politics would be generally improved, many argue, if more moderate and more qualified people served in government. In this paper, we investigate what draws such individuals to run for elected office, focusing on a dimension of politics that has received scant attention within the candidate-entry literature—namely, the ability of candidates, once elected, to exercise meaningful influence and change public policy. In a ratings-based conjoint survey experiment, we find that the opportunity to wield greater authority increases moderates’ interest in seeking public office, whereas extremists appear indifferent; and that more qualified people express more interest in running for office when greater authority is vested in an office, the threshold needed to pass legislation is lower, and staff support is higher. These findings have broad implications for our understanding of political representation, government effectiveness, and the relationship between institutional reform and mass politics.

Which frame fits? Policy learning with framing for climate change policy attitudes

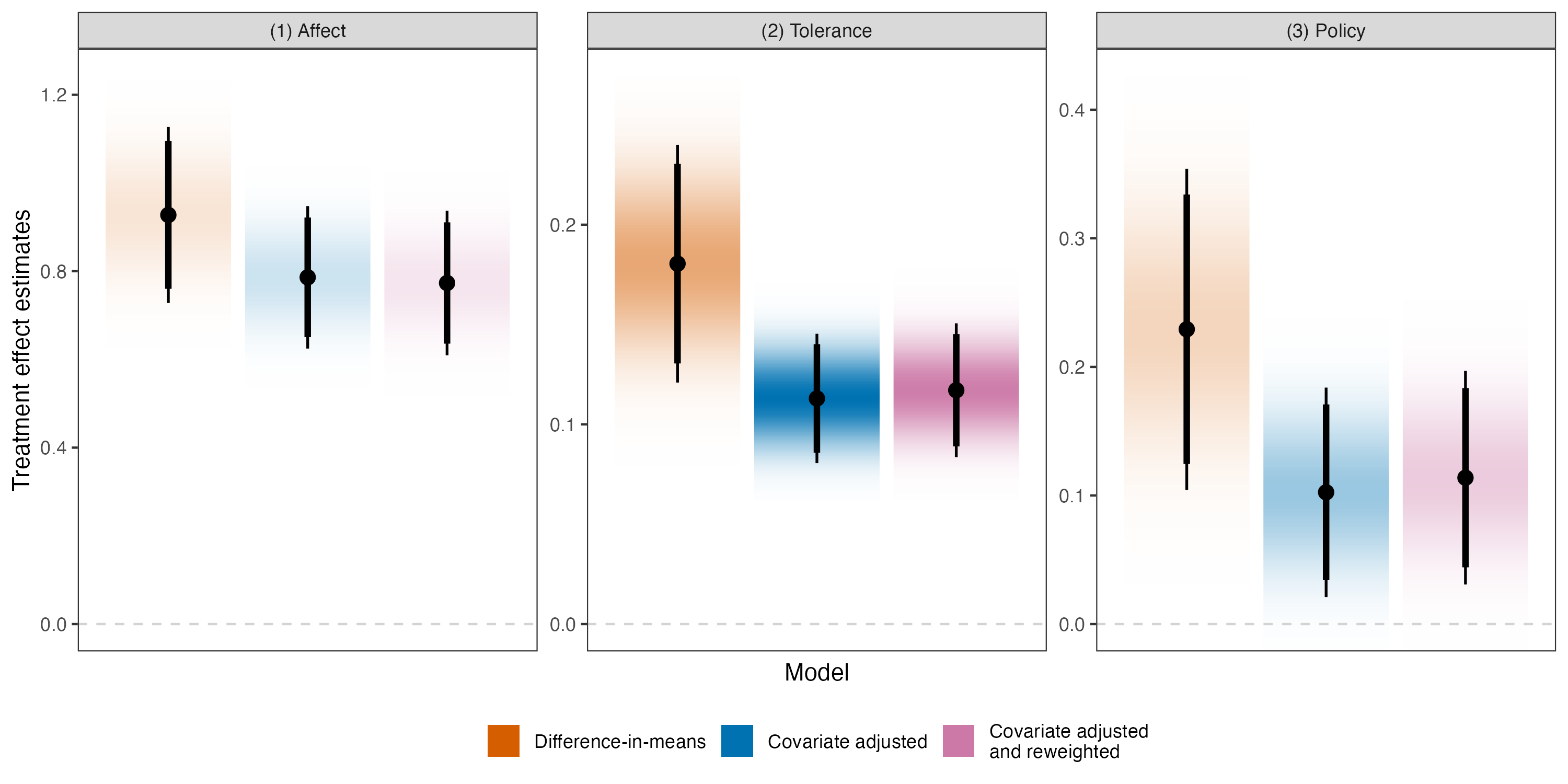

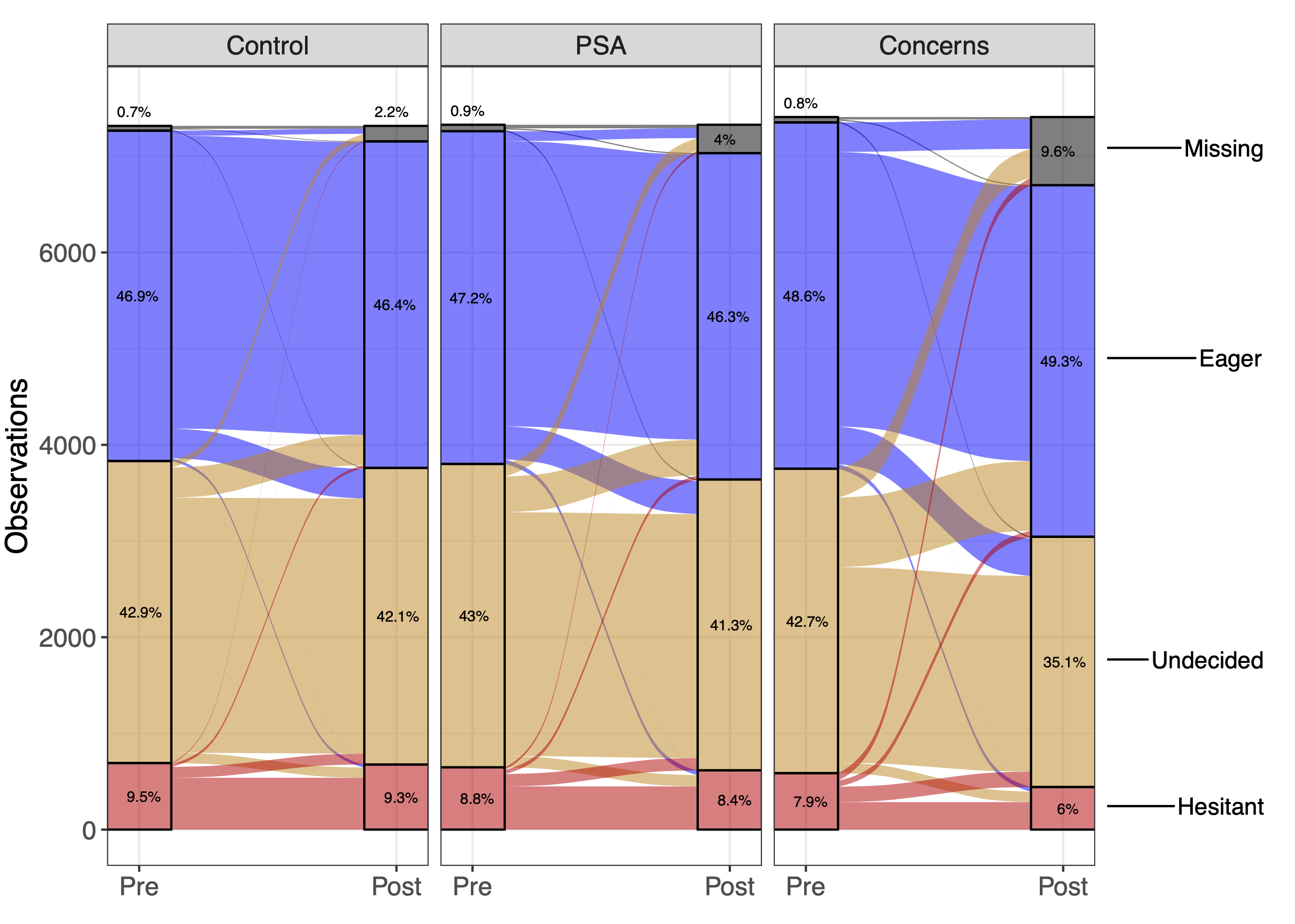

How should climate policy be framed to maximize public support? We evaluate the effects of five distinct message frames—scientific, religious, moral, and two economic (efficiency and equity)—on support for climate change policy using a randomized experiment with over 2,300 U.S. respondents. Moving beyond pairwise frame comparisons, we adopt a policy learning approach that identifies the most effective frame using cross-validated sample splitting, thereby avoiding selective inference. We find that the economic efficiency frame consistently yields the largest gains in policy support, outperforming both the control condition and all alternative frames. While the effects of personalized frame assignment were modest and not significantly different from assigning the best overall frame, we find consistent positive effects of the efficiency frame across partisan subgroups. Our findings offer methodological and substantive contributions: we provide a design for learning and validating optimal treatments in experimental framing studies, and we offer practical guidance for advocates seeking to increase support for climate action.
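The idea of choosing the best frame on one part of the sample and evaluating it on another can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' estimator: the function names (`arm_mean`, `select_and_validate`, `simulate`), the five-fold split, and the simulated effect sizes are all hypothetical.

```python
import random
from statistics import mean

ARMS = ["control", "scientific", "religious", "moral", "efficiency", "equity"]


def arm_mean(rows, arm):
    """Mean outcome among rows assigned to `arm`."""
    return mean(y for a, y in rows if a == arm)


def select_and_validate(rows, n_folds=5, seed=0):
    """Pick the best frame on the training folds, then estimate its
    effect against control on the held-out fold, for each fold in turn."""
    rng = random.Random(seed)
    rows = list(rows)
    rng.shuffle(rows)
    folds = [rows[k::n_folds] for k in range(n_folds)]
    frames = [a for a in ARMS if a != "control"]
    choices, estimates = [], []
    for k, test in enumerate(folds):
        train = [r for j, fold in enumerate(folds) if j != k for r in fold]
        # Selection uses training data only, so the held-out estimate
        # is not contaminated by the choice of frame.
        best = max(frames, key=lambda a: arm_mean(train, a))
        choices.append(best)
        estimates.append(arm_mean(test, best) - arm_mean(test, "control"))
    return choices, mean(estimates)


def simulate(n=2400, seed=1):
    """Simulated respondents; effect sizes are invented for illustration."""
    effects = {"control": 0.0, "scientific": 0.1, "religious": 0.1,
               "moral": 0.1, "efficiency": 0.5, "equity": 0.1}
    rng = random.Random(seed)
    rows = []
    for i in range(n):
        arm = ARMS[i % len(ARMS)]  # balanced assignment for simplicity
        rows.append((arm, effects[arm] + rng.gauss(0, 1)))
    return rows


choices, estimate = select_and_validate(simulate())
print("frame chosen in each fold:", choices)
print("cross-validated effect of chosen frame:", round(estimate, 3))
```

Because the frame is selected without looking at the held-out fold, the averaged estimate does not suffer from the upward bias that arises when the same data are used both to pick a winner and to measure its effect.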

On the foundations of the design-based approach

The design-based paradigm may be adopted in causal inference and survey sampling when we assume Rubin’s stable unit treatment value assumption (SUTVA) or impose similar frameworks. While often taken for granted, such assumptions entail strong claims about the data generating process. We develop an alternative design-based approach: we first invoke a generalized, non-parametric model that allows for unrestricted forms of interference, such as spillover. We define a new set of inferential targets and discuss their interpretation under SUTVA and a weaker assumption that we call the No Unmodeled Revealable Variation Assumption (NURVA). We then reconstruct the standard paradigm, reconsidering SUTVA at the end rather than assuming it at the beginning. Despite its similarity to SUTVA, we demonstrate the practical insufficiency of NURVA for identifying substantively interesting quantities. In so doing, we provide clarity on the nature and importance of SUTVA for applied research.

Gnostic notes on temporal validity

Kevin Munger argues that, when an agnostic approach is applied to social scientific inquiry, the goal of prediction to new settings is generically impossible. We aim to situate Munger’s critique in a broader scientific and philosophical literature and to point to ways in which gnosis can and, in some circumstances, must be used to facilitate the accumulation of knowledge. We question some of the premises of Munger’s arguments, such as the definition of statistical agnosticism and the characterization of knowledge. We further emphasize the important role of microfoundations and particularism in the social sciences. We assert that Munger’s conclusions may be overly pessimistic as they relate to practice in the field.

Adaptive experimental design: Prospects and applications in political science

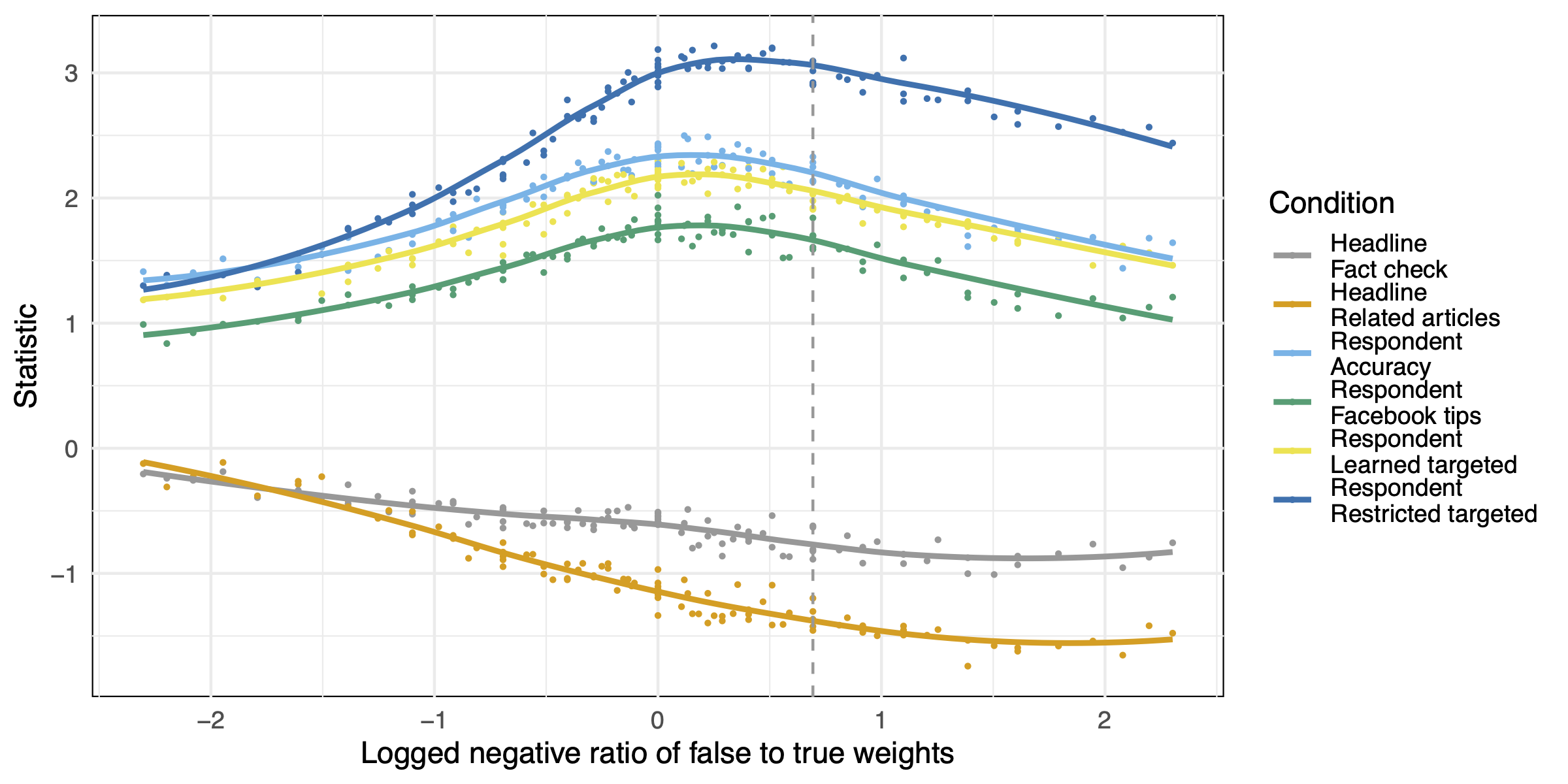

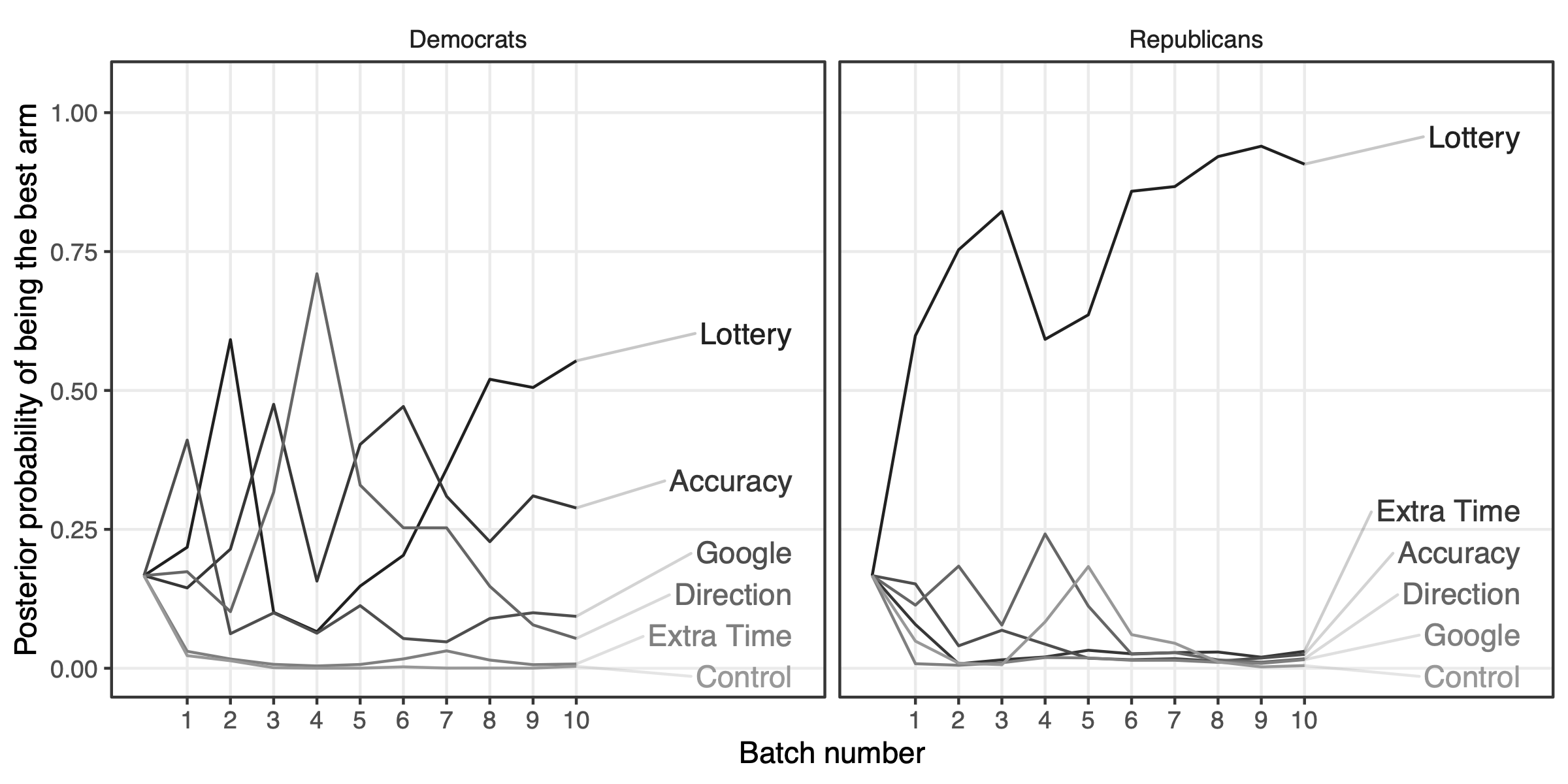

Experimental researchers in political science frequently face the problem of inferring which of several treatment arms is most effective. They may also seek to estimate mean outcomes under that arm, construct confidence intervals, and test hypotheses. Ordinarily, multi-arm trials conducted using static designs assign participants to each arm with fixed probabilities. However, a growing statistical literature suggests that adaptive experimental designs that dynamically allocate larger assignment probabilities to more promising treatments are better equipped to discover the best-performing arm. Using simulations and empirical applications, we explore the conditions under which such designs hasten the discovery of superior treatments and improve the precision with which their effects are estimated. Recognizing that many scholars seek to assess performance relative to a control condition, we also develop and implement a novel adaptive algorithm that seeks to maximize the precision with which the largest treatment effect is estimated.
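One common way to allocate larger assignment probabilities to more promising arms is Thompson sampling. The sketch below assumes binary outcomes and uniform Beta(1, 1) priors; it illustrates the general idea of adaptive allocation and is not the novel control-focused algorithm the abstract mentions. The function name and the success rates are hypothetical.

```python
import random


def thompson_sampling(true_rates, n_participants=1500, seed=0):
    """Assign participants one at a time with Beta-Bernoulli Thompson sampling.

    `true_rates` are the (normally unknown) success probabilities of each
    arm; they are used here only to simulate outcomes.
    """
    rng = random.Random(seed)
    k = len(true_rates)
    successes = [0] * k
    failures = [0] * k
    for _ in range(n_participants):
        # Draw one plausible success rate per arm from its posterior.
        draws = [rng.betavariate(successes[a] + 1, failures[a] + 1)
                 for a in range(k)]
        # Assign the participant to the arm with the largest draw.
        arm = max(range(k), key=draws.__getitem__)
        if rng.random() < true_rates[arm]:
            successes[arm] += 1
        else:
            failures[arm] += 1
    assigned = [s + f for s, f in zip(successes, failures)]
    return assigned, successes


# Hypothetical success rates, invented for illustration.
assigned, successes = thompson_sampling([0.2, 0.3, 0.6])
print("participants per arm:", assigned)
print("successes per arm:", successes)
```

A static design would send roughly a third of participants to each arm. Here the allocation shifts toward the arm that appears best as evidence accumulates, which is why naive arm means from such data call for the adjusted inference procedures the adaptive design literature studies.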

Understanding Ding's apparent paradox

(i) Rao-type tests may be suboptimal under nonlocal alternatives (Engle, 1984), and as Ding (2017) shows, the FRT is asymptotically equivalent to a Rao-type test assuming constant effects. As an alternative to the FRT, a Wald-type analogue using the difference in means (considered by Freedman, 2008; Samii and Aronow, 2012; Gerber and Green, 2012; and Lin, 2013) is a valid test of Fisher’s null and does not suffer from the potential pathology of Rao-type tests. Furthermore, this Wald-type test, which is equivalent to a pooled-variance two-sample z-test, is asymptotically equivalent to the FRT under local alternatives. Reichardt and Gollob (1999) discuss this point, with reference to Mosteller and Rourke (1973). (ii) As highlighted by Pratt (1964), Romano (1990), and Freedman (2008), the behavior of tests under incorrect working assumptions may depend on the joint distribution of the data. Analogous to the Behrens-Fisher problem, a test of the null of no average effect that assumes there is no effect on the variance may be more or less powerful than a test of no average effect that makes no such additional assumption. We illustrate this by comparing the Wald-type analogue to the FRT with the standard Wald-type test of Neyman’s null. Combining (i) and (ii), we see that Ding’s (2017) apparent paradox follows from the use of a suboptimal test under nonlocal alternatives and the well-known behavior of tests of joint hypotheses when one of the constituent hypotheses is false. (iii) We also note an error in Ding’s (2017) Theorem 7 and suggest a refinement that is correct at full generality.
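The three tests discussed above can be put side by side in a small sketch: a Monte Carlo FRT using the absolute difference in means, a pooled-variance z-statistic (the Wald-type analogue to the FRT), and an unpooled z-statistic (the usual Wald-type test of Neyman's null). The function names, the number of permutation draws, and the toy data are assumptions made for illustration.

```python
import math
import random
from statistics import mean, variance


def diff_in_means(y1, y0):
    return mean(y1) - mean(y0)


def frt_p_value(y1, y0, draws=2000, seed=0):
    """Monte Carlo Fisher randomization test of the sharp null of no
    effect for any unit, using the absolute difference in means."""
    rng = random.Random(seed)
    pooled = list(y1) + list(y0)
    n1 = len(y1)
    observed = abs(diff_in_means(y1, y0))
    hits = 0
    for _ in range(draws):
        rng.shuffle(pooled)  # re-randomize treatment labels
        if abs(diff_in_means(pooled[:n1], pooled[n1:])) >= observed:
            hits += 1
    return (hits + 1) / (draws + 1)


def z_pooled(y1, y0):
    """Difference in means over a pooled-variance standard error."""
    n1, n0 = len(y1), len(y0)
    s2 = ((n1 - 1) * variance(y1) + (n0 - 1) * variance(y0)) / (n1 + n0 - 2)
    return diff_in_means(y1, y0) / math.sqrt(s2 * (1 / n1 + 1 / n0))


def z_unpooled(y1, y0):
    """Difference in means over an unpooled (Neyman-style) standard error."""
    n1, n0 = len(y1), len(y0)
    se = math.sqrt(variance(y1) / n1 + variance(y0) / n0)
    return diff_in_means(y1, y0) / se


# Toy data: a small, high-variance treated group and a larger,
# low-variance control group (values invented for illustration).
treated = [0, 12]
control = [4, 5, 6, 5, 4, 6]
print("FRT p-value:", frt_p_value(treated, control))
print("pooled z:   ", z_pooled(treated, control))
print("unpooled z: ", z_unpooled(treated, control))
```

With equal group sizes the two z-statistics coincide. They diverge when group sizes and variances both differ, which is the Behrens-Fisher-like situation in which a test that assumes no effect on the variance can be more or less powerful than one that does not.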